In March 2021, the FBI warned that deepfakes could be the next major cyber threat. On what grounds? According to the federal law enforcement agency, the threats include synthetic corporate personas and sophisticated simulations of existing employees. What are the consequences for small businesses?

Although deepfakes do not yet play a significant role in social engineering, the World Economic Forum has begun paying closer attention to the technology, whose use is growing in volume and sophistication, and has flagged this trend as a potential threat to businesses. Many other experts share that view. According to a report by CyberCube, deepfakes could become a major threat to businesses within the next two to three years.

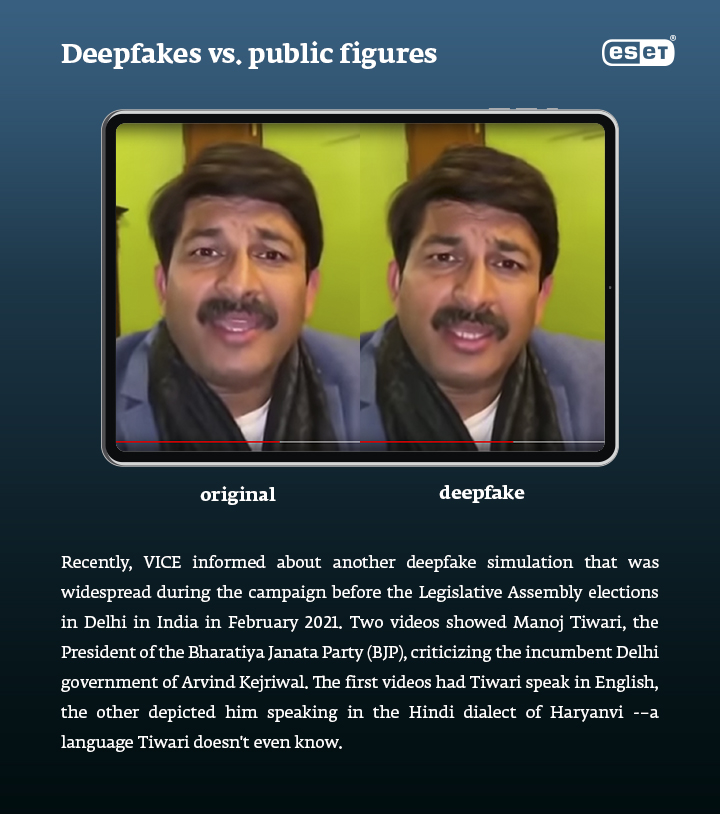

Deepfakes are becoming easier to create, require fewer source images, and are increasingly commercialized. So how might this technology be used for social engineering, and where could it pose a risk?

1) A very convincing form of impersonation

One of the first known cases of this technique was reported in August 2019: cybercriminals used an AI-generated imitation of a CEO’s voice to trick a company into wiring $243,000. The victim later said the caller had convincingly replicated his boss’s German accent and tone of voice. In this case, the attackers did not need to produce a video at all; a phone call was enough.

While many popular deepfake videos are complete face swaps, a deepfake can also be made by altering an existing video. A “lip-sync” algorithm is trained on a real person’s audio recordings to translate speech sounds into basic mouth shapes, which are then applied to existing footage of that person. The technique could be used to put words into the mouths of CEOs, key suppliers, or any employee, for example a colleague who appears to ask for a money transfer or login credentials. For now, such attacks are more likely to target executives in order to damage a company’s reputation.

Want to know more about this type of attack? Read our article Impersonation: When an Attacker Is Posing as the CEO.

2) Sextortion at its very worst

As VICE recently reported, since deepfakes took off in 2017, the technology has been used extensively to create fake adult content from existing celebrity footage and AI algorithms. But there have already been several cases in which ordinary people (mostly women) were inserted into such fake videos and then blackmailed. The victims did nothing wrong, yet until the video is exposed as fake, it can cause serious distress and reputational damage.

However, there’s no point in scaring your staff with these examples. Instead, explain why it is now more important than ever to be careful about the information they share online. Currently, how convincing a fake video is depends largely on how many photos and videos of the target the software can learn from. Anyone can therefore start building a defense by monitoring their digital footprint and limiting the number of photos they share online.

3) New scenarios people cannot debunk

According to Cyberscout, one factor limiting the spread of deepfakes is that scammers don’t need them yet. For now, attackers still do well with existing types of social engineering, which also points to gaps in cybersecurity training across companies. But once most businesses train their staff regularly and multi-layered cybersecurity protection becomes standard, the most effective way to get people’s attention and push them to act will be to pose as someone trustworthy and convince them they are doing the right thing, which is exactly what deepfakes make easier.

How not to fall for a deepfake scam

To protect yourself and your company from such fraud, it helps to know in which situations these attacks are likely to occur. The FBI says we should expect new attack scenarios in settings such as remote meetings, and that companies should train employees in effective verification techniques and in spotting the flaws that often give away deepfaked photos or videos. Although the FBI and IT experts expect the barriers to creating convincing digital fraud to keep falling, such training can at least help users understand the contexts in which deepfakes appear and how to respond.

Tools such as the verification platform from Sensity.AI or Reality Defender, a browser plug-in for detecting fake videos, can help expose deepfakes. There are also measures you can take to protect your own content: adding noise pixels to your videos can make them harder to manipulate, while analyzing individual frames or the audio spectrum can reveal the distortions typical of deepfaked videos.
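The frame-analysis idea can be illustrated with a deliberately simplified Python sketch. It assumes each frame has already been decoded into a grid of grayscale pixel values by some other tool, and it flags only sudden jumps between consecutive frames, one crude symptom of spliced or replaced footage. The function names, the `solid` helper, and the threshold are hypothetical; real detection tools rely on trained models and far richer signals, so treat this as an illustration of the principle, not a working deepfake detector.

```python
from statistics import median
from typing import List

# Hypothetical representation: a frame is a 2D grid of grayscale values (0-255),
# assumed to have been decoded from the video beforehand.
Frame = List[List[float]]


def frame_difference(a: Frame, b: Frame) -> float:
    """Mean absolute per-pixel difference between two equally sized frames."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("frames must have the same dimensions")
    total = 0.0
    count = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            total += abs(pa - pb)
            count += 1
    return total / count if count else 0.0


def flag_abrupt_transitions(frames: List[Frame], threshold: float = 5.0) -> List[int]:
    """Return indices i where the change from frame i-1 to frame i is an outlier.

    The baseline is the median of all consecutive-frame differences, and the
    spread is their median absolute deviation (MAD). A toy heuristic only.
    """
    diffs = [frame_difference(frames[i - 1], frames[i]) for i in range(1, len(frames))]
    if not diffs:
        return []
    med = median(diffs)
    mad = median([abs(d - med) for d in diffs])
    scale = max(mad, 1.0)  # floor avoids dividing by zero on very uniform footage
    return [i + 1 for i, d in enumerate(diffs) if (d - med) / scale > threshold]


def solid(value: float, size: int = 4) -> Frame:
    """Hypothetical test helper: a frame where every pixel has the same value."""
    return [[value] * size for _ in range(size)]


if __name__ == "__main__":
    # Smoothly brightening clip with one spliced-in frame at index 5.
    clip = [solid(10 + k) for k in range(10)]
    clip[5] = solid(200)
    print(flag_abrupt_transitions(clip))  # [5, 6]
```

The median and MAD are used instead of the mean and standard deviation on purpose: a short burst of manipulated frames barely shifts the median, so it still stands out against the baseline instead of inflating the spread and hiding itself. Both edges of the spliced frame are reported, since the jump into it and the jump out of it are equally abnormal.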

Deepfakes are slowly starting to test human resilience, so it's time to boost your IT immune system.